R libraries

Install, if necessary, and load necessary libraries and set up R session. If you are running the docker container or using an instance on the orchestra platform, then you should have these libraries installed already.

Introduction

Principal component analysis (PCA) provides a method for visualizing the distinguishing patterns or information in a dataset. Additional tutorials will provide a more in-depth review of the method, this is just a short and sweet “taster” vignette.

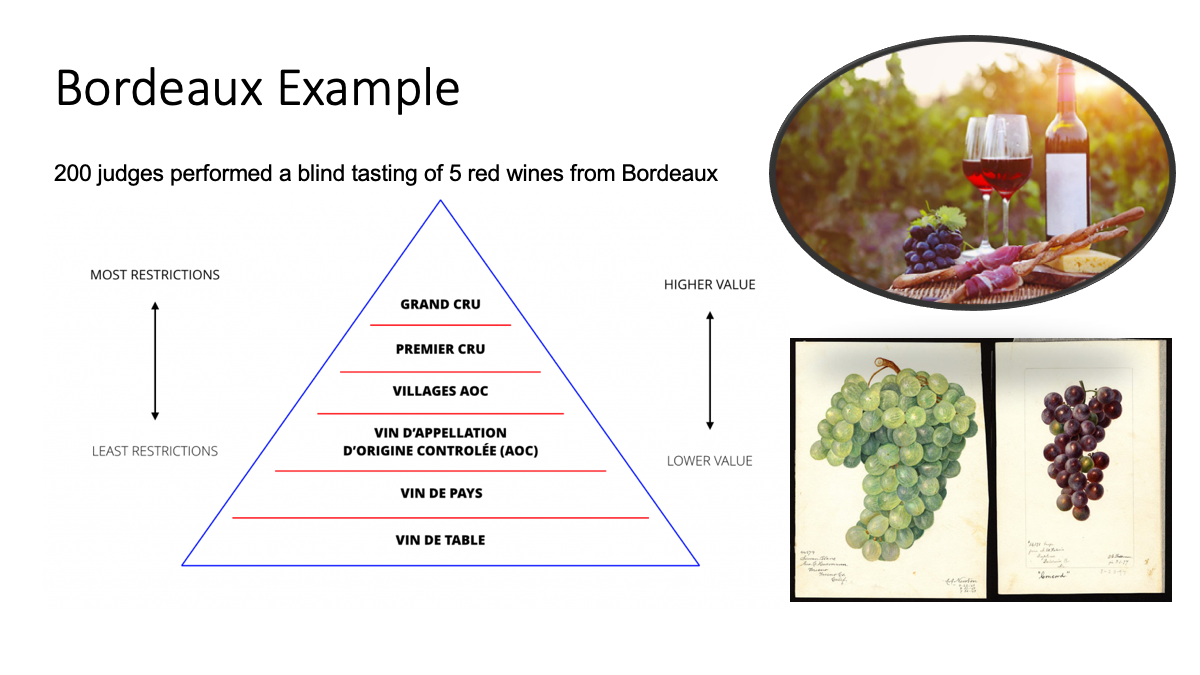

Dataset: Winetasting in Bordeaux

We will use a very simple example dataset of wine-tasting where 200 judges performed a blind tasting of 5 red wines from Bordeaux, scoring each as boring, mediocre, good or excellent.

The dataset is available in the ade4 R package:

data(bordeaux)In French wines, table wine has fewer legal restrictions and is cheaper, whereas Cru and Grand Cru wine have more regulation standards and therefore are more expensive.

Figure 1: The bordeaux dataset provides scores from 200 judges in a blind tasting of five different types of red wine from the Bordeaux region of south western parts of France. The judges scored wines as excellent, good, mediocre and boring.

data(bordeaux)

bordeaux## excellent good mediocre boring

## Cru_Bourgeois 45 126 24 5

## Grand_Cru_classe 87 93 19 1

## Vin_de_table 0 0 52 148

## Bordeaux_d_origine 36 68 74 22

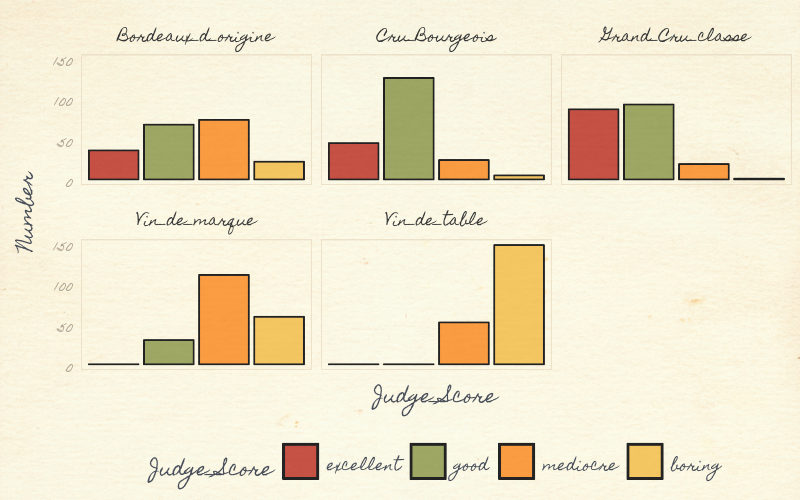

## Vin_de_marque 0 30 111 59Data visualization is important and always a good first step with any dataset, even simple ones. We visualize this data, with a simple barplot, to see the distribution of excellent, good, mediocre and boring scores for each wine. The Grand Cru Classe received more “excellent” scores than the Vin_de_table (table wine).

#devtools::install_github("gadenbuie/ggpomological")

#install.packages("magick")

df<-bordeaux %>%

tibble::rownames_to_column(var="Wine") %>%

reshape2::melt(.,variable.name="Judge_Score",

value.name="Number")

p<- ggplot(df,

aes(Judge_Score, Number, fill=Judge_Score))+

geom_bar(color="black",stat = "identity") +

facet_wrap(~Wine, nrow = 2)+

scale_fill_pomological()+

theme_pomological("Homemade Apple", 12)+

theme(axis.text.x=element_blank(),

legend.position = "bottom",

legend.key = element_rect(colour = "black"))

paint_pomological(p,res = 110) %>%

magick::image_write("barplot-painted.png")

Figure: Visualizing the bordeaux dataset using ggplot and ggtheme pomological

To make it visually interesting we used the ggpomological graphics ggtheme, to display these wine data. The pomology ggtheme and palette evokes the style and colors of old watercolor prints of pomology; a branch of botany that studies and cultivates fruit ggpomological.

Running PCA

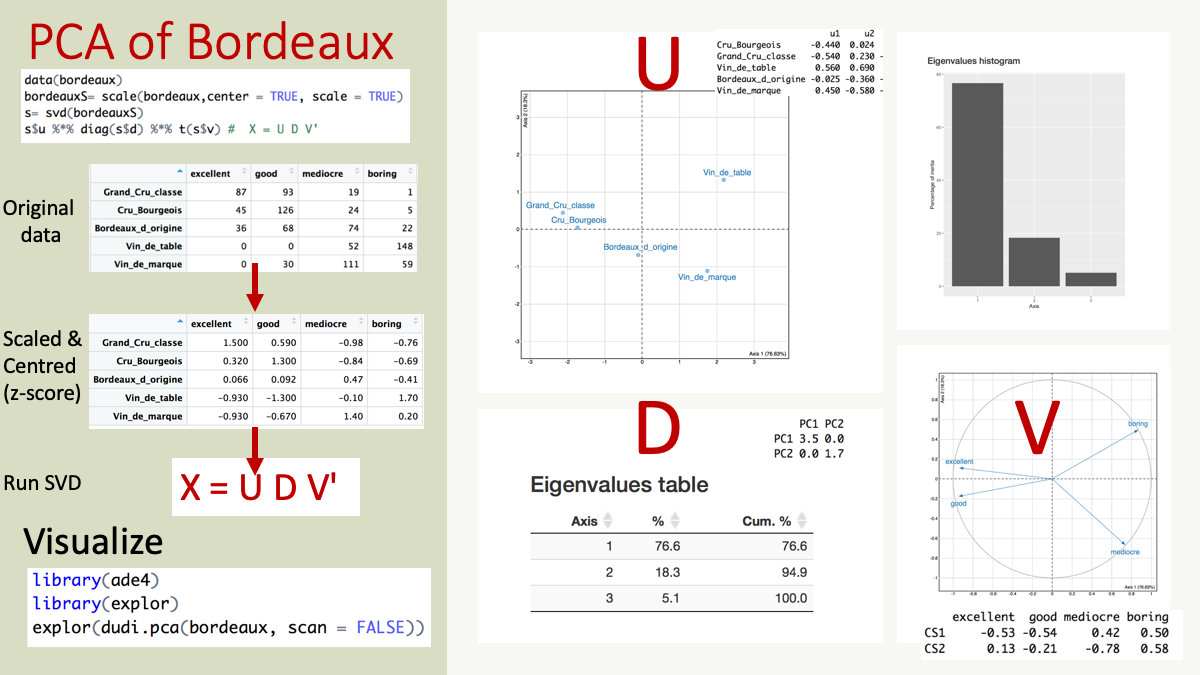

We will show two quick approaches to run PCA as seen in Figure 3.

1. SVD of scaled and centered data

We will scale the data with center=TRUE, scale=TRUE,

## excellent good mediocre boring

## Cru_Bourgeois 0.3148138 1.2550661 -0.8434205 -0.6893123

## Grand_Cru_classe 1.4746543 0.5934498 -0.9752049 -0.7549611

## Vin_de_table -0.9278724 -1.2711053 -0.1054276 1.6576321

## Bordeaux_d_origine 0.0662766 0.0922253 0.4744240 -0.4103050

## Vin_de_marque -0.9278724 -0.6696359 1.4496290 0.1969464and apply singular value decomposition (SVD) to the z-score scaled data, X. The SVD was discovered over 100 years ago independently by Eugenio Beltrami (1835–1899) and Camille Jordan (1838–1921). Martin and Porter (2012) wrote a very readable and enjoyable article on the history and application of SVD entitled ‘The Extraordinary SVD’.

SVD matrix decomposition of X will generates 3 matrices, U, D, V where \[X = U D V'\]

## List of 3

## $ d: num [1:4] 3.50 1.71 9.00e-01 8.09e-17

## $ u: num [1:5, 1:4] -0.4404 -0.5408 0.5595 -0.0253 0.4471 ...

## $ v: num [1:4, 1:4] -0.535 -0.539 0.422 0.496 0.13 ...## [,1] [,2] [,3] [,4]

## [1,] 0.3148138 1.2550661 -0.8434205 -0.6893123

## [2,] 1.4746543 0.5934498 -0.9752049 -0.7549611

## [3,] -0.9278724 -1.2711053 -0.1054276 1.6576321

## [4,] 0.0662766 0.0922253 0.4744240 -0.4103050

## [5,] -0.9278724 -0.6696359 1.4496290 0.1969464D - singular values.

U - the left singular vectors

V - the right singular vectors The rank of X is equal to the number of non-zero singular values, the length(D), is equal to the columns of matrices U,V. The max rank is the number of rows or columns of X, whichever is lower. In this 5x4 dimension matrix, the max rank is 4.

## [1] 4## [1] TRUE## [1] TRUEIn statistics and machine learning we talk about finding a low-rank approximation of a matrix, that is a few vectors that approximate the matrix, which can be done by subsetting to a few eigenvectors, or setting eigenvalues to zero.

Finally, the left (U) and right (V) singular vectors \[ U D V'\] provide a new subspace for the rows and columns of X, respectively.

## [1] TRUE## [1] TRUEEigenvalues

The diagonal matrix \[diag(s$d)\] contain the singular values, which are always \[>0\].

diag(s$d)## [,1] [,2] [,3] [,4]

## [1,] 3.50159 0.000000 0.0000000 0.000000e+00

## [2,] 0.00000 1.711166 0.0000000 0.000000e+00

## [3,] 0.00000 0.000000 0.9004307 0.000000e+00

## [4,] 0.00000 0.000000 0.0000000 8.087712e-17The singular values s$d are square roots of eigenvalues. The eigenvalues which tell how much variance is captured by each component. The maximum number of eigenvalues is the min of the number of row or columns in X.

## [1] 76.63 18.30 5.07 0.00The first component (PC1, horizontal) captured almost 76.6% of the variance or information in the data and the second component has 18.3%, cumulatively, a plot of PC1 and PC2 would include 95% of the variance in the data. Therefore by droping PC3 and PC4, we have a lower rank representation of X.

Eigenvector

Eigenvalues and eigenvectors come in pairs. The columns in U and V are the eigenvectors associated with the singular values in D. The rank is 4, we have four eigenvectors and eigenvalues.

Eigenvalues are ranked, such that the first component PC1 explains the most variance, the second explains the next and so forth. The vectors are orthogonal, uncorrelated. Each tells a different aspect about the data.

So lets look at the singular vectors. The first component, vector PC1 captures almost 77% of the data, but what signal is captures by PC1. Lets look at the first column in the matrices U and V.

The matrix U has the same number rows, representing the 5 wines as the matrix X.

data.frame(rownames(bordeaux), s$u)## rownames.bordeaux. X1 X2 X3 X4

## 1 Cru_Bourgeois -0.44039631 0.02356098 0.77035252 0.188987607

## 2 Grand_Cru_classe -0.54083076 0.22976639 -0.61518608 0.352593045

## 3 Vin_de_table 0.55947146 0.69035944 0.05163321 -0.005444855

## 4 Bordeaux_d_origine -0.02534875 -0.36052462 -0.14842228 -0.710249368

## 5 Vin_de_marque 0.44710436 -0.58316218 -0.05837736 0.579204642The number of rows of matrix V equals the number of columns in matrix X; the judges scores (boring/medicore/ood/excellent)

data.frame(colnames(bordeaux), s$v)## colnames.bordeaux. X1 X2 X3 X4

## 1 excellent -0.5345671 0.1302525 -0.74214304 0.3827479

## 2 good -0.5387736 -0.2070736 0.62362747 0.5271929

## 3 mediocre 0.4215187 -0.7790795 -0.23353575 0.4010215

## 4 boring 0.4962691 0.5772261 0.07598379 0.6440136We will cover more about intepreting PC1, and these scores in the next vignette. But we see PC1 distinguished Boring/medicore from Good/excellent wines.

- ** positive values ** of PC1

- Boring/medicore judge scores (PC1, right singular vector)

- Vin de table (Table Wine) and Vin de marque (Brand Wine) (PC1, left singular vector)

- ** negative values ** of PC1

- Good/excellent scores of the first left singular vectors.

- Cru and Grand Cru wines have have negative values on first right singular vectors. Regional Bordeaux wine (Bordeaux d’origine) is close to the origin.

If a left singular vector has its sign changed, changing the sign of the corresponding right vector gives an equivalent decomposition. Therefore we look at the direction and distance from the origin, aka the separation either side of the origin.

Scores

pc.scores <- s$u %*% diag(s$d)

colnames(pc.scores) <- paste0("PC", 1:ncol(pc.scores))

data.frame(rownames(bordeaux),pc.scores)## rownames.bordeaux. PC1 PC2 PC3 PC4

## 1 Cru_Bourgeois -1.54208750 0.04031674 0.69364907 1.528477e-17

## 2 Grand_Cru_classe -1.89376778 0.39316845 -0.55393244 2.851671e-17

## 3 Vin_de_table 1.95903989 1.18131964 0.04649213 -4.403642e-19

## 4 Bordeaux_d_origine -0.08876093 -0.61691750 -0.13364398 -5.744293e-17

## 5 Vin_de_marque 1.56557632 -0.99788733 -0.05256477 4.684440e-172. Using a PCA function in R

We can perform the same calculation by as calling a PCA function, for example if we call the R function prcomp

prcomp(bordeaux, scale=TRUE, center=TRUE)## Standard deviations (1, .., p=4):

## [1] 1.750795e+00 8.555830e-01 4.502154e-01 4.043856e-17

##

## Rotation (n x k) = (4 x 4):

## PC1 PC2 PC3 PC4

## excellent -0.5345671 0.1302525 -0.74214304 0.3827479

## good -0.5387736 -0.2070736 0.62362747 0.5271929

## mediocre 0.4215187 -0.7790795 -0.23353575 0.4010215

## boring 0.4962691 0.5772261 0.07598379 0.6440136The pc.scores manually calculated above is the same as

prcomp(bordeaux, center = TRUE, scale=TRUE)$x## PC1 PC2 PC3 PC4

## Cru_Bourgeois -1.54208750 0.04031674 0.69364907 7.763558e-17

## Grand_Cru_classe -1.89376778 0.39316845 -0.55393244 1.931347e-16

## Vin_de_table 1.95903989 1.18131964 0.04649213 -1.185617e-16

## Bordeaux_d_origine -0.08876093 -0.61691750 -0.13364398 -2.021094e-17

## Vin_de_marque 1.56557632 -0.99788733 -0.05256477 -1.578670e-16Or we can use the ade4 function dudi.pca. By default dudi.pca uses center = TRUE, scale = TRUE

## Class: pca dudi

## Call: dudi.pca(df = bordeaux, scannf = FALSE)

##

## Total inertia: 4

##

## Eigenvalues:

## Ax1 Ax2 Ax3

## 3.0653 0.7320 0.2027

##

## Projected inertia (%):

## Ax1 Ax2 Ax3

## 76.632 18.301 5.067

##

## Cumulative projected inertia (%):

## Ax1 Ax1:2 Ax1:3

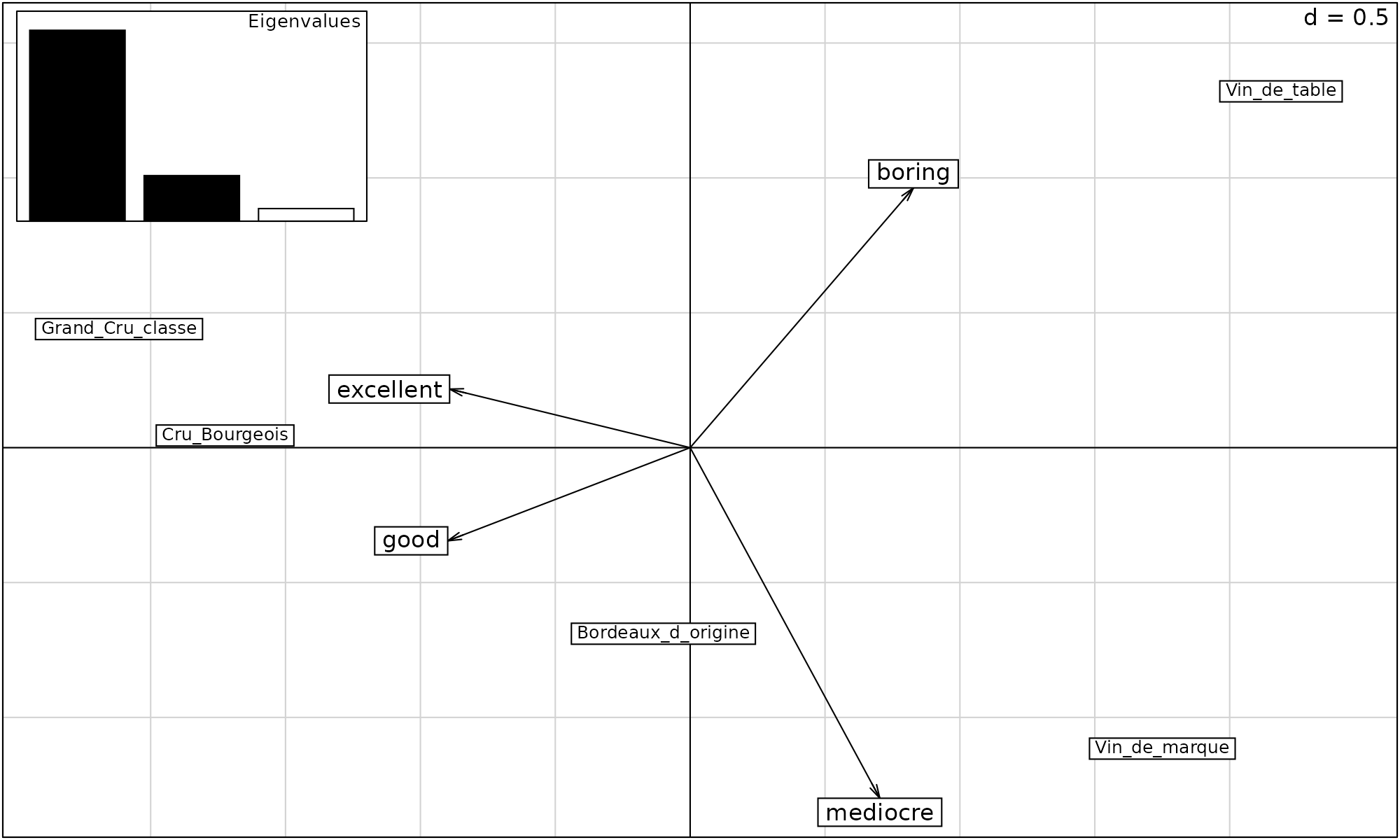

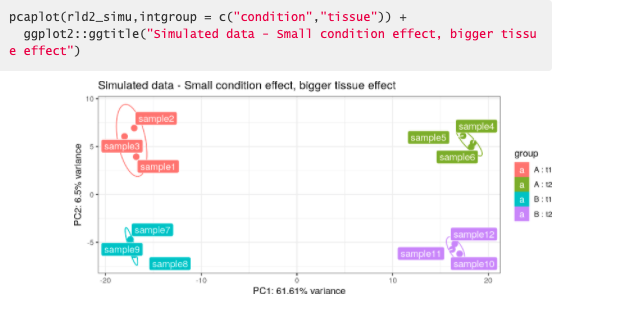

## 76.63 94.93 100.00If we plot the output of bordeaux.pca, we see the first component (horizontal), captures most of the information in the dataset, distinguished Vin de table and Vin de marque from the other wines. Whilst Vin de table and Vin de marque were associated with the scores “boring” and “mediocre”. The Grand Cru and Cru wines were “excellent” and “good”

scatter( bordeaux.pca)

We will learn more about the relationship between SVD and PCA, the different R packages that run PCA, in the second vignette Principal Component Analysis in R. We will apply PCA to genomics data in the 4th vignette of the workshop; PCA example: scRNAseq.

Figure 3: Overview of a PCA of bordeaux dataset

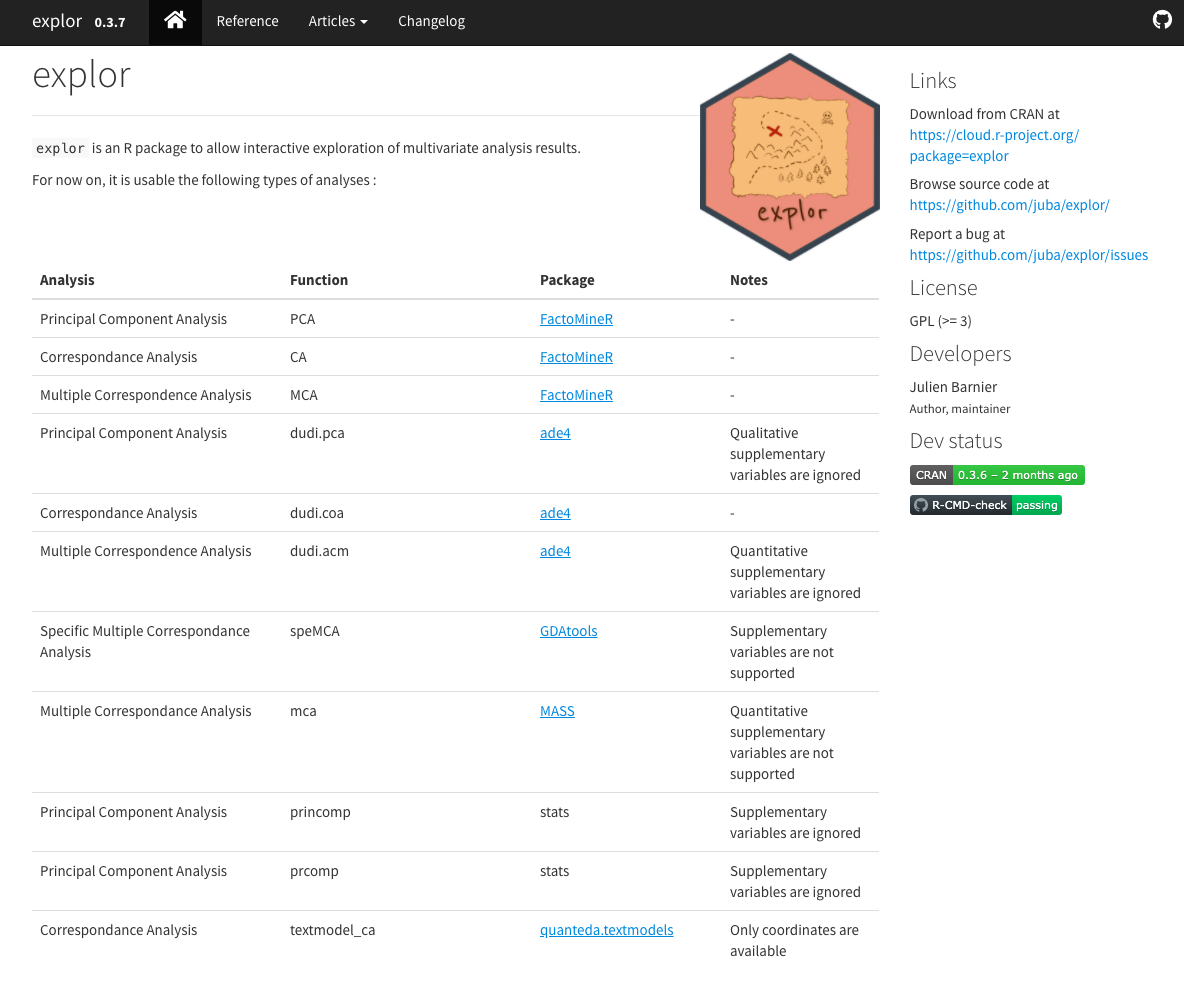

A few resources for visualization of Results; Explor, pcaExplorer

Explor

Julien Barnier’s package explor provides a nice shiny interface to explore matrix factorization results including PCA, Correspondence analysis, or Multiple correspondence analysis and other dimension reduction methods available in R packages ade4, vegan, FactoMineR, GDATools, MASS, stats (prcomp or princomp)

Explor

pcaExplorer

Federico Marini and Harald Binder have create a Bioconductor package called pcaExplorer

PCA of bordeaux dataset

## R version 4.1.0 (2021-05-18)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Ubuntu 20.04.2 LTS

##

## Matrix products: default

## BLAS/LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.8.so

##

## locale:

## [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=en_US.UTF-8 LC_COLLATE=en_US.UTF-8

## [5] LC_MONETARY=en_US.UTF-8 LC_MESSAGES=C

## [7] LC_PAPER=en_US.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=en_US.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] reshape2_1.4.4 tibble_3.1.3 ade4_1.7-17 explor_0.3.9 ggplot2_3.3.5

## [6] dplyr_1.0.7 knitr_1.33

##

## loaded via a namespace (and not attached):

## [1] Rcpp_1.0.7 tidyr_1.1.3 assertthat_0.2.1 rprojroot_2.0.2

## [5] digest_0.6.27 utf8_1.2.2 mime_0.11 plyr_1.8.6

## [9] R6_2.5.0 ellipse_0.4.2 evaluate_0.14 highr_0.9

## [13] pillar_1.6.2 rlang_0.4.11 jquerylib_0.1.4 rmarkdown_2.9

## [17] pkgdown_1.6.1 textshaping_0.3.5 desc_1.3.0 stringr_1.4.0

## [21] htmlwidgets_1.5.3 munsell_0.5.0 shiny_1.6.0 compiler_4.1.0

## [25] httpuv_1.6.1 xfun_0.24 pkgconfig_2.0.3 systemfonts_1.0.2

## [29] htmltools_0.5.1.1 tidyselect_1.1.1 fansi_0.5.0 crayon_1.4.1

## [33] withr_2.4.2 later_1.2.0 MASS_7.3-54 grid_4.1.0

## [37] jsonlite_1.7.2 xtable_1.8-4 gtable_0.3.0 lifecycle_1.0.0

## [41] DBI_1.1.1 magrittr_2.0.1 formatR_1.11 scales_1.1.1

## [45] stringi_1.7.3 cachem_1.0.5 fs_1.5.0 promises_1.2.0.1

## [49] bslib_0.2.5.1 ellipsis_0.3.2 ragg_1.1.3 generics_0.1.0

## [53] vctrs_0.3.8 scatterD3_1.0.0 RColorBrewer_1.1-2 tools_4.1.0

## [57] glue_1.4.2 purrr_0.3.4 fastmap_1.1.0 yaml_2.2.1

## [61] colorspace_2.0-2 memoise_2.0.0 sass_0.4.0